Introduction

DevOps has been a driving force behind faster delivery and better quality for a few years now. Our mid-range colleagues have been championing the approach for a while, and there are lots of good reasons for mainframe teams to pick it up and run with it as well:

- Code quality verification

- Automated build

- Test automation

- Automated (consistent) deployment

- Monitoring and feedback

- Continuous delivery / continuous integration

Here’s a view (a couple of years old now) of the DevOps lifecycle tools market:

This one was produced by Atlassian, makers of Confluence and Jira. Note that practically every version of this diagram that shows tools includes Jenkins (in this case, at the top in the middle). Why is that?

What is Jenkins?

Jenkins started life as Hudson, an automated build server conceived by Kohsuke Kawaguchi at Sun Microsystems (since acquired by Oracle). After a period of development by both Oracle and the open source community, the project forked in 2011, with the open source branch becoming Jenkins.

Jenkins has a single master server (now called the controller) with one or more slave agents, which can run on other machines or on the same machine as the master. The elements that make Jenkins stand out from other automation servers are:

- A very active open source project, with a strong developer community and good documentation

- An extensive plugin library (c. 1,400 plugins) providing integration with a huge range of tools and services

- Pipelines (processes) driven by Groovy, a scripting language for the Java platform

- REST API allowing pipelines to be driven remotely, and information to be fed back to Jenkins from external sources
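As an illustration of the REST API point, here is a minimal Python sketch (standard library only) that builds the HTTP request used to queue a pipeline run remotely. It assumes Jenkins’ usual `/job/<name>/build` and `/job/<name>/buildWithParameters` endpoints and HTTP basic authentication with a user API token; the function names, server URL, job name, user and parameter names are hypothetical placeholders. It does not fetch a CSRF crumb, on the assumption that API-token authentication is used, which recent Jenkins versions exempt from crumb checks.

```python
import base64
import urllib.parse
import urllib.request
from typing import Mapping, Optional


def build_trigger_request(
    jenkins_url: str,
    job_name: str,
    user: str,
    api_token: str,
    params: Optional[Mapping[str, str]] = None,
) -> urllib.request.Request:
    """Build (but do not send) a POST that queues a Jenkins job.

    A job inside a folder is given as "folder/job"; Jenkins addresses it
    as /job/folder/job/job, so each path segment gets its own "job/" prefix.
    """
    job_path = "/".join(
        "job/" + urllib.parse.quote(part, safe="") for part in job_name.split("/")
    )
    # Parameterised jobs use a different endpoint from plain ones.
    endpoint = "buildWithParameters" if params else "build"
    url = f"{jenkins_url.rstrip('/')}/{job_path}/{endpoint}"
    if params:
        url += "?" + urllib.parse.urlencode(params)
    # Basic auth using an API token rather than the account password.
    token = base64.b64encode(f"{user}:{api_token}".encode()).decode()
    return urllib.request.Request(
        url, method="POST", headers={"Authorization": f"Basic {token}"}
    )


def trigger(req: urllib.request.Request) -> str:
    """Send the request and return the queue item URL from the Location header."""
    with urllib.request.urlopen(req) as resp:
        return resp.headers["Location"]
```

For example, `trigger(build_trigger_request("https://jenkins.example.com", "mainframe/cobol-build", "alice", token, {"BRANCH": "main"}))` would queue a hypothetical `cobol-build` job in a `mainframe` folder. On success Jenkins normally replies 201 Created, and the returned queue item URL can be polled to find the build number once the run starts.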

The plugins enable integration with other software. For example:

- With the GitHub plugin enabled, Jenkins can be triggered to run a pipeline when code is checked in. It can then pull the code, deliver it to the build server and drive the build process.

- With the SonarQube plugin enabled, it can check the quality of the delivered code as well.

- With the Selenium plugin enabled, it can test the resulting web application and report the results.

Pipelines are written in Groovy and can either be scripted directly into the pipeline definition in Jenkins or (better!) delivered as a file, conventionally named Jenkinsfile, in the application’s SCM repository. This keeps the Jenkins build, test and deployment configuration under version control, in step with the application it represents.

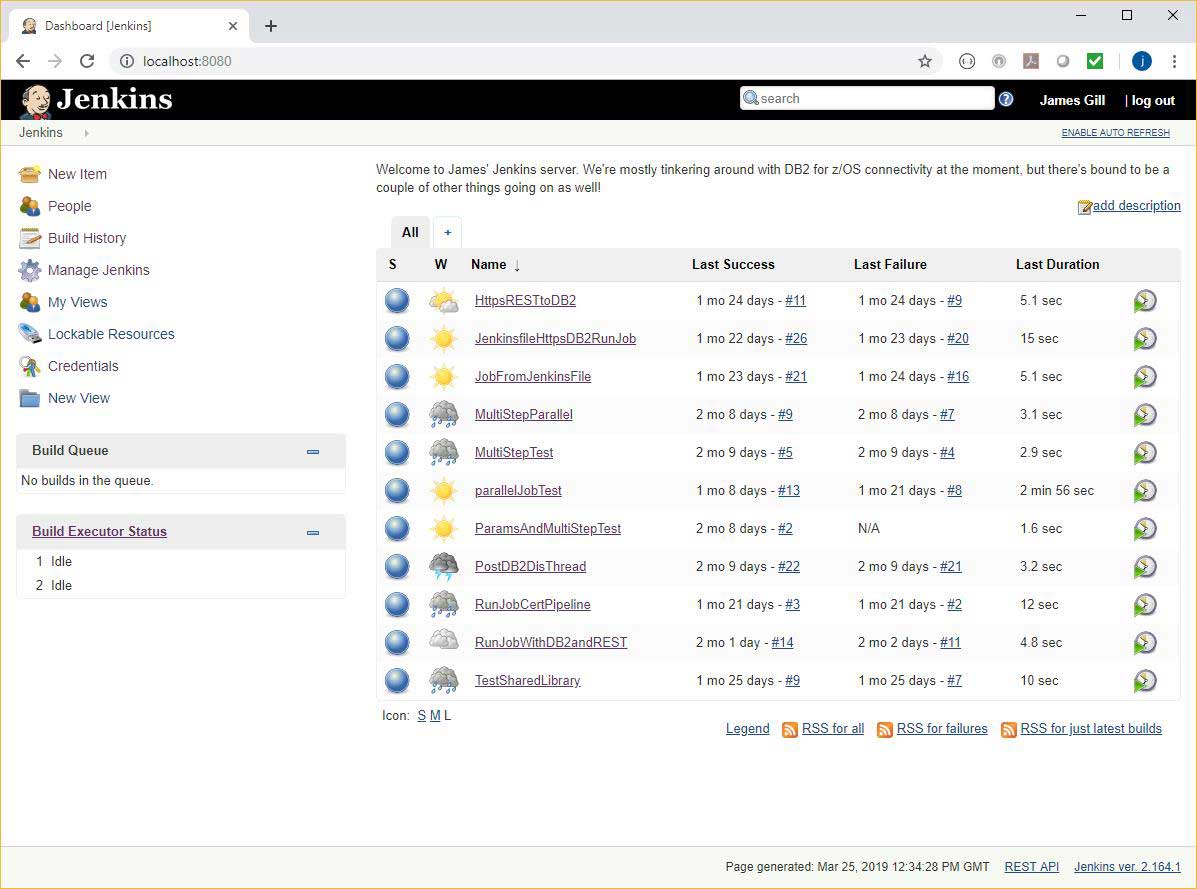

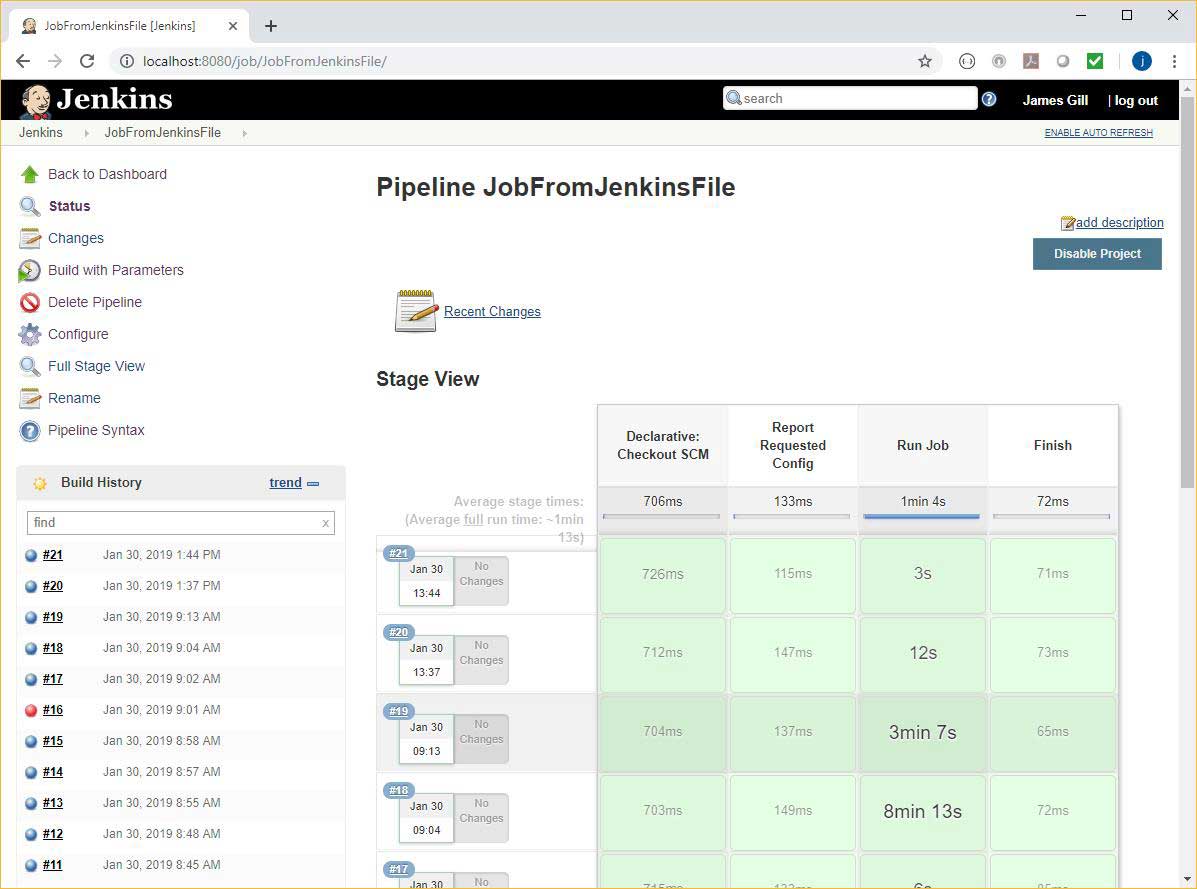

The following are screenshots from one of our Jenkins test servers:

1 – The Jenkins dashboard, showing a list of the currently defined projects/pipelines

2 – Looking at one of the pipeline views, we can see recent executions and timings from the different pipeline stages:
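The stage timings shown in the pipeline view can also be read programmatically, which helps with the monitoring and feedback side of DevOps. The Python sketch below assumes the Pipeline Stage View plugin is installed and that its `wfapi/describe` endpoint returns a JSON object with a `stages` list whose entries carry `name`, `status` and `durationMillis`. The helper names, job name and build number are hypothetical, and jobs inside folders are not handled.

```python
import json
import urllib.request
from typing import Any, Dict, List, Tuple


def describe_url(jenkins_url: str, job_name: str, build: int) -> str:
    """URL of the (assumed) Stage View description of one pipeline run."""
    return f"{jenkins_url.rstrip('/')}/job/{job_name}/{build}/wfapi/describe"


def stage_timings(describe: Dict[str, Any]) -> List[Tuple[str, str, float]]:
    """Return (stage name, status, duration in seconds) for each stage."""
    return [
        (stage["name"], stage["status"], stage["durationMillis"] / 1000)
        for stage in describe.get("stages", [])
    ]


def fetch_stage_timings(
    jenkins_url: str, job_name: str, build: int, auth_header: str
) -> List[Tuple[str, str, float]]:
    """Fetch the run description over HTTP and summarise its stages."""
    req = urllib.request.Request(
        describe_url(jenkins_url, job_name, build),
        headers={"Authorization": auth_header},
    )
    with urllib.request.urlopen(req) as resp:
        return stage_timings(json.load(resp))
```

Keeping the JSON parsing separate from the HTTP call means the summary logic can be checked against a saved response without a live Jenkins server.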

Why Should You Care?

With its huge number of integration plugins, Jenkins can automate almost any solution, however broad the underlying technical infrastructure. Business applications are rarely single-platform solutions these days, and coordinating the build, test and deploy processes across multiple environments is a challenge Jenkins was built to manage.

Pros and Cons

Things that we liked:

- Out-of-the-box capabilities, especially when combined with:

  - A huge number of plugins, extending the reach and integration capabilities

  - Groovy (script-based) pipelines

  - A REST API for pipeline triggering and information feedback

- An active open source project with good online documentation, examples and support

Things we didn’t like:

- The current z/OS batch job plugin submits jobs over FTP, relying on the z/OS FTP server’s JES interface (JESINTERFACELEVEL 1 or 2). The security team will not be keen: FTP is a plain-text protocol, exposing user IDs and passwords on the network, and the JES interface allows batch jobs to be submitted, and their output retrieved, over that same connection.

- You can run a z/OS Jenkins slave agent, but it executes under z/OS UNIX System Services (USS) rather than as a conventional MVS started task, which can limit what it is able to do.
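To make the FTP concern above concrete, here is a minimal Python sketch, using the standard `ftplib` module, of what submitting a job through the z/OS FTP server’s JES interface looks like. It is not the plugin’s own code. It assumes the server accepts `SITE FILETYPE=JES`, that the `STOR` target name is ignored in JES mode, and that the job ID appears in the transfer reply with wording like "It is known to JES as JOB01234" (the exact text may vary by release). The helper names, host, user ID and JCL are hypothetical.

```python
import io
import re
from ftplib import FTP
from typing import Optional

# Assumed reply wording, e.g. "250-It is known to JES as JOB01234".
_JOB_ID = re.compile(r"known to JES as\s+(\w+)", re.IGNORECASE)


def parse_job_id(reply: str) -> Optional[str]:
    """Extract the JES job ID from a z/OS FTP STOR reply, if present."""
    match = _JOB_ID.search(reply)
    return match.group(1) if match else None


def submit_jcl(host: str, user: str, password: str, jcl: str) -> Optional[str]:
    """Submit JCL to JES over plain FTP and return the job ID, if reported.

    Everything here, including USER/PASS and the JCL itself, crosses the
    network unencrypted, which is exactly what worries the security team.
    """
    with FTP(host) as ftp:
        ftp.login(user, password)         # credentials sent in clear text
        ftp.sendcmd("SITE FILETYPE=JES")  # switch the session to JES mode
        # ASCII-mode upload; the name "JCL" is assumed to be ignored by JES.
        reply = ftp.storlines("STOR JCL", io.BytesIO(jcl.encode("ascii")))
        return parse_job_id(reply)
```

The point of the sketch is that nothing in this exchange is protected: anyone able to observe the connection sees the user ID, password and job content.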

Conclusions

We really like Jenkins. What we need now is a way to submit and track z/OS batch jobs that won’t make the security team excitable.

In the next blog post on Jenkins and z/OS, we’ll take a look at Groovy pipelines and how we can structure them to run tasks.